SAS Questions Big Data Approaches of SAP, Oracle

SAS chose its Premier Business Leadership event in Las Vegas, which drew more than 600 senior-level attendees from the public and private sectors, as the forum to differentiate itself from the competing Big Data offerings of SAP and Oracle. Those two software giants recently hosted shows of their own, heralding their respective SAP HANA and Oracle Exadata solutions.

SAP is pushing HANA’s in-memory capabilities as the best way to perform data analytics. Similarly, Oracle is promoting its Exalytics, Exalogic and Exadata engineered systems as the rightful home of Big Data sets and as a way to analyze them rapidly. With 26 terabytes of combined RAM and flash cache, Exadata presents a formidable case. Mark Hurd, Oracle's president, claims the latest generation of Exadata is 20 times faster than its predecessor on report generation and some other workloads.

These systems work by moving vast data sets that normally sit on relatively slow disk drives into much faster memory, or into flash storage that is itself roughly 100 times faster than disk. As a result, the processor spends far less time idle while it waits for information to be retrieved, so it is able to perform more computations.
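To make the latency argument concrete, here is a back-of-the-envelope model in Python. It assumes, hypothetically, that each block of data takes 1 ms to process and 10 ms to fetch from disk, that fetching and computing never overlap, and it applies the roughly 100x flash speedup mentioned above. The figures are illustrative only, not vendor benchmarks.

```python
def busy_fraction(compute_ms: float, wait_ms: float) -> float:
    """Share of wall-clock time the processor spends computing rather than
    stalled on I/O, assuming fetch and compute never overlap (hypothetical model)."""
    return compute_ms / (compute_ms + wait_ms)


# Hypothetical per-block costs, chosen only for illustration.
COMPUTE_MS = 1.0                    # time to process one block of data
DISK_WAIT_MS = 10.0                 # time to fetch that block from disk
FLASH_WAIT_MS = DISK_WAIT_MS / 100  # flash taken as ~100x faster than disk

disk = busy_fraction(COMPUTE_MS, DISK_WAIT_MS)
flash = busy_fraction(COMPUTE_MS, FLASH_WAIT_MS)
print(f"disk:  processor busy {disk:.1%} of the time")   # 9.1%
print(f"flash: processor busy {flash:.1%} of the time")  # 90.9%
```

Under these assumptions the same processor completes about ten times as many blocks per second once the data sits in flash; real systems overlap I/O with computation to varying degrees, so actual gains depend heavily on the workload.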

Data Analytics: Not Just Number Crunching

This is a logical argument, but SAS is having none of it. It contends that SAP and Oracle are merely number crunchers, albeit fantastically fast devourers of digits, and that their approaches don’t provide true analytics.

“These in-memory approaches like SAP HANA are basic business intelligence on steroids,” said Jim Davis, senior vice president and chief marketing officer at SAS. “But you can’t do high-end analytics on them as they are database-bound systems.”

Davis' point is that databases rely on technologies such as SQL, which is designed for querying and reporting. They can summarize enormous amounts of data very quickly, but they can’t really slice and dice data the way full-scale analytics requires.

Greg Schulz, an analyst with StorageIO Group, concurs. “Exadata is probably one of the most misunderstood systems out there,” he said. “It does only one thing, and that is to function as a database back-end SQL off-load engine for Oracle databases.”

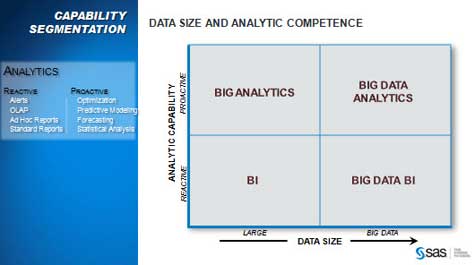

To help users visualize this train of thought, SAS has drawn up a chart with four quadrants. The “X” axis represents the total volume of data to be addressed, while the “Y” axis represents the degree of business intelligence (BI) and analytics capability. SAS defines BI as basic querying and reporting, while analytics covers more advanced areas such as neural network modeling, statistical analysis and correlation. These more advanced techniques, SAS claims, fall outside the province of traditional BI.
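Correlation, one of the analytics techniques SAS cites, measures how strongly two variables move together. A minimal Python sketch of the Pearson coefficient follows; the function name and the promotion-spend and unit-sales figures are hypothetical and are not taken from any SAS product.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances, all unnormalized (the 1/n factors cancel).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: weekly promotion spend (thousands) vs. units sold.
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units = [12.0, 15.0, 21.0, 24.0, 30.0]
print(round(pearson_r(spend, units), 3))  # 0.993
```

A value near +1, as here, says higher spend went with higher sales in this toy data; on its own it says nothing about cause and effect.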

SAS says that while Oracle and SAP provide Big Data BI, it provides true Big Data analytics through analytics software optimized to run on newly introduced massively parallel processing (MPP) hardware.
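The article does not describe how SAS distributes work across its MPP hardware. As a generic illustration of the massively parallel pattern, the sketch below has each hypothetical node summarize only its own slice of the data, then merges the small per-node summaries into an exact global mean and variance using the pairwise update of Chan, Golub and LeVeque. The nodes are simulated sequentially in one Python process; on real MPP hardware each `summarize()` call would run on a separate node.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass
class Partial:
    """Summary one node sends to the coordinator: count, mean and M2
    (the sum of squared deviations from the mean)."""
    n: int
    mean: float
    m2: float


def summarize(values: list[float]) -> Partial:
    """Runs on one node, over that node's local slice only."""
    if not values:
        raise ValueError("each node needs at least one value")
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values)
    return Partial(n, mean, m2)


def merge(a: Partial, b: Partial) -> Partial:
    """Combine two partial summaries exactly (Chan et al. pairwise update)."""
    n = a.n + b.n
    delta = b.mean - a.mean
    mean = a.mean + delta * b.n / n
    m2 = a.m2 + b.m2 + delta * delta * a.n * b.n / n
    return Partial(n, mean, m2)


data = [float(v) for v in range(1, 101)]
NODES = 4  # hypothetical cluster size
chunk = len(data) // NODES
slices = [data[i * chunk:(i + 1) * chunk] for i in range(NODES)]

# "Map" step: every node summarizes its own slice (simulated sequentially here).
partials = [summarize(s) for s in slices]
# "Reduce" step: only the small summaries are combined, never the raw rows.
total = reduce(merge, partials)
sample_variance = total.m2 / (total.n - 1)
print(total.n, total.mean, round(sample_variance, 3))
```

Because each node ships three numbers rather than its raw rows, the traffic to the coordinator does not grow with the size of each slice, which is one reason this kind of decomposition suits MPP systems.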

Beyond the Database

Like Oracle and SAP, SAS has adopted an in-memory approach of its own, architecting its analytics platform to process far more data in less time. Unlike its competitors, however, SAS does not do this processing in the database. Rather, its platform sits on top of databases and data warehouses to provide insight into what’s inside.

“SAS is not an in-memory database; it is an in-memory analytics platform,” said Davis.
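The distinction Davis draws, analytics that sits on top of a database rather than running inside it, can be sketched in a few lines of Python. Here an in-memory SQLite database stands in for a data warehouse, and the `daily_sales` table and its figures are hypothetical. A single SQL query extracts the rows; everything after that (mean, standard deviation, outlier flagging) runs on the copy held in application memory. This illustrates the general architecture only and is not SAS's API.

```python
import sqlite3
from statistics import mean, pstdev

# Hypothetical stand-in for a data warehouse: an in-process SQLite
# database holding a small daily_sales table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (day INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO daily_sales VALUES (?, ?)",
    list(enumerate([100, 102, 98, 101, 99, 250, 97, 103])),
)

# Step 1: a single query moves the rows out of the database.
rows = conn.execute("SELECT day, amount FROM daily_sales ORDER BY day").fetchall()
conn.close()

# Step 2: every later computation runs on the in-memory copy,
# with no further round trips to the database.
amounts = [amount for _, amount in rows]
mu, sigma = mean(amounts), pstdev(amounts)
# Flag days more than two standard deviations from the mean.
outliers = [day for day, amount in rows if abs(amount - mu) / sigma > 2]
print(outliers)  # [5]
```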

To further make the case, SAS points to IDC’s recent report on advanced analytics. IDC notes that SAS owns a 35 percent share of that market, with IBM second at 15 percent and Microsoft third at 2 percent. Neither SAP nor Oracle is in the top 10.

“Databases are not good for statistical analysis,” said Jim Goodnight, CEO of SAS. “Exadata and HANA, being SQL-based transactional databases, may be very fast at simple query and reporting, but they don’t perform advanced analytics.”

While business intelligence tends to focus on individual transactions, analytics uses a broader set of data to predict trends and anticipate needed changes or opportunities. A typical use of standard BI would be examining sales databases to determine top salespeople, top stores and current best-selling items. In contrast, analytics might examine customer behavior in an attempt to identify new products that would appeal to consumers.
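For contrast, the standard BI questions in the paragraph above (top salespeople, top stores, best-selling items) map directly onto SQL aggregation. A minimal sketch using Python's built-in `sqlite3` module, with a hypothetical `sales` table and made-up rows:

```python
import sqlite3

# Hypothetical transaction table for a small retailer.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (rep TEXT, store TEXT, item TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [
        ("Ana", "Downtown", "kettle", 40.0),
        ("Ana", "Downtown", "toaster", 60.0),
        ("Ben", "Airport", "kettle", 40.0),
        ("Cho", "Airport", "blender", 90.0),
        ("Ben", "Downtown", "kettle", 40.0),
    ],
)

# Top sales people by total revenue.
top_reps = conn.execute(
    "SELECT rep, SUM(amount) AS revenue FROM sales "
    "GROUP BY rep ORDER BY revenue DESC LIMIT 2"
).fetchall()

# Top store by total revenue.
top_store = conn.execute(
    "SELECT store, SUM(amount) AS revenue FROM sales "
    "GROUP BY store ORDER BY revenue DESC LIMIT 1"
).fetchone()

# Best-selling item by number of units sold.
best_item = conn.execute(
    "SELECT item, COUNT(*) AS units FROM sales "
    "GROUP BY item ORDER BY units DESC LIMIT 1"
).fetchone()
conn.close()

print(top_reps)   # [('Ana', 100.0), ('Cho', 90.0)]
print(top_store)  # ('Downtown', 140.0)
print(best_item)  # ('kettle', 3)
```

Each query summarizes transactions that have already happened; none of them estimates what customers will do next, which is the line SAS draws between BI and analytics.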

“Predictive analytics can ask questions like ‘Who are the 500 people most likely to terminate service next month,’ something you just can’t do in SQL,” Goodnight said.
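Goodnight's example is a ranking by predicted probability. One common way to produce such a ranking, shown here purely as an illustration and not as SAS's method, is to fit a logistic regression on historical customers who did or did not leave, score current customers, and keep the k highest-risk ones. The features (support calls last quarter, whether the customer is on a contract), the data, and k = 2 (standing in for the quote's 500) are all hypothetical; the model is trained with plain batch gradient descent to keep the sketch dependency-free.

```python
import heapq
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], x: list[float]) -> float:
    """Churn probability for feature vector x; the last weight is the bias."""
    # zip stops at len(x), so the bias w[-1] is added separately.
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic-regression weights by batch gradient descent on log loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi  # derivative of log loss w.r.t. the logit
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


# Hypothetical history: [support_calls_last_quarter, on_contract] -> churned?
history_X = [[0, 1], [1, 1], [0, 0], [2, 1], [1, 0], [5, 0], [4, 0], [6, 1], [3, 0]]
history_y = [0, 0, 0, 0, 0, 1, 1, 1, 1]
weights = train_logistic(history_X, history_y)

# Score current customers and keep the K most likely to churn.
current = {"c1": [6, 0], "c2": [0, 1], "c3": [2, 1], "c4": [4, 1]}
K = 2  # the quote's example uses 500
at_risk = heapq.nlargest(K, current, key=lambda cid: predict(weights, current[cid]))
print(at_risk)
```

The output of the model is a probability per customer, so "the 500 most likely" reduces to a top-k selection over those scores; `heapq.nlargest` does that without sorting the whole customer list.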

Can Analytics Be Commoditized?

There is a movement afoot to commoditize many aspects of IT. Hardware and storage, for example, have largely been commoditized, and vendors are finding it harder to convince companies to use proprietary hardware. In software, too, open-source developers have done a good job of commoditizing various applications and operating systems.

Advanced analytics, however, remains specialized, and SAS is confident it will stay that way.

“The art and science of developing insights can’t be commoditized,” said David Pope, principal solutions architect for the High Performance Analytics Practice at SAS.

In addition to software, an underlying hardware infrastructure is required – some, though not all, of which can be commoditized. Even more crucial are data scientists, the in-demand professionals who ensure that data models are correctly constructed and results are realistic. SAS is attempting to position itself as the vendor of choice for data scientists.

Drew Robb is a freelance writer specializing in technology and engineering. Currently living in California, he is originally from Scotland, where he received a degree in geology and geography from the University of Strathclyde. He is the author of Server Disk Management in a Windows Environment (CRC Press).
